I almost didn't read this book. I had a sneaking suspicion it would just be a 300-page advertisement for Khan Academy.

I was half right. It is kind of an advertisement for Khan Academy. But it's also something more interesting: a deeply personal account of why education is fundamentally broken and how AI might - just might - be the thing that finally fixes it. After recording a podcast about it and spending way too much time thinking about its implications, I've come to believe Salman Khan is either prophetically right about the future of learning, or we're all about to witness the most spectacular educational catastrophe in human history.

The Two Sigma Problem

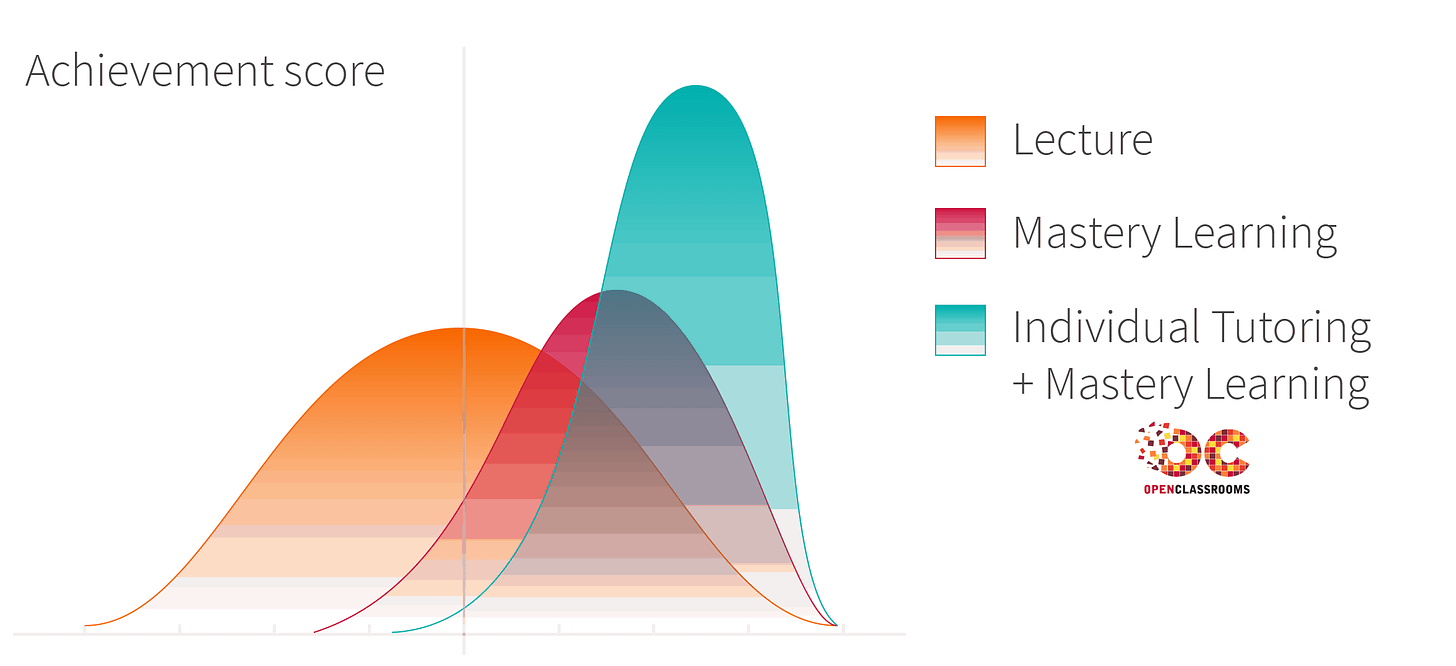

Let's start with the most important graph you've never seen:

In 1984, educational psychologist Benjamin Bloom quantified something teachers have known forever: one-on-one tutoring is magic. Students who work with a personal tutor gain what Bloom called a "two sigma" improvement - that's two standard deviations, which translates to jumping from the 50th percentile to roughly the 98th percentile. Put another way, an average student with a tutor performs better than about 98% of students in a traditional classroom.
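If you want to check the percentile arithmetic yourself, here's a minimal sketch. It assumes scores follow a normal distribution (my simplifying assumption, not something the book spells out), and `percentile_from_z` is just a name I made up for illustration.

```python
import math


def percentile_from_z(z: float) -> float:
    """Percentile rank (0-100) of a z-score under a standard normal distribution."""
    # Standard normal CDF written in terms of the error function.
    return 100.0 * 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


if __name__ == "__main__":
    # Compare an average student (0 sigma) with one and two sigma gains.
    for z in (0.0, 1.0, 2.0):
        print(f"{z:+.0f} sigma -> {percentile_from_z(z):.1f}th percentile")
```

Under that assumption, a two-sigma gain moves a student from the 50th percentile to roughly the 98th, which is why the effect is so striking.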

This finding should have revolutionized education. Instead, it became known as "Bloom's Two Sigma Problem" because nobody could figure out how to scale it. Alexander the Great had Aristotle as his personal tutor, but you can't give every student their own Aristotle.

So what did we do instead? Khan describes it perfectly:

In the 18th century, we began to have a utopian idea of offering mass public education to everyone. We didn't have the resources to give every student a personal tutor. So instead, we batched them together in groups of 30 or so, and we applied standardized processes to them, usually in the form of lectures and periodic assessments.

The results speak for themselves. According to a 2020 Gallup analysis, 54% of Americans between ages 16 and 74 read below a sixth-grade level. In the United States, most high school graduates who go to college don't even place into college-level math. Three-quarters lack basic proficiency in writing.

In Detroit, before COVID, 6% of eighth-graders were performing at grade level. After COVID? 3%.

Let that sink in. We've built an educational system where 97% of students in one of America's major cities are failing to meet basic standards. If this were a medical treatment with a 3% success rate, we'd ban it. If it were a bridge with a 3% chance of not collapsing, we'd demolish it. But because it's "just" education, we shrug and blame the teachers, or the parents, or the kids themselves.

Enter the Machines

This is where Khan's story gets interesting. In early 2023, before GPT-4 was even publicly released, Sam Altman and Greg Brockman from OpenAI visited Khan Academy. They let Khan test their new model. His immediate reaction: "This changes everything."

Why? Because for the first time in human history, we might have technology capable of providing every student with their own personal tutor. Not a perfect tutor, not Aristotle, but something that could work with students "at their own time and pace" - the key ingredient in Bloom's formula.

The education establishment's response was swift and predictable: ban it.

Los Angeles Unified became the first major school district to prohibit ChatGPT. Seattle followed. Then New York City, Fairfax County, Montgomery County. The reasons were always the same: it enables cheating, it doesn't build critical thinking skills, it will destroy writing ability.

One professor quoted in Inside Higher Ed declared ChatGPT "a plague, one that threatens our minds more than our bodies."

Meanwhile, in China, the government mandated AI education starting in first grade.

The contrast exposes our reflexive technophobia, our inability to see past the immediate disruption to the transformative potential. We're like 19th-century lamplighters campaigning against electricity because it'll put them out of work.

The Cheating Problem That Isn't

The cheating argument particularly irritates me because it's both the most common objection and the most obviously wrong. As Khan points out, academic dishonesty isn't new:

In 2019—more than three years before ChatGPT was publicly released—The New York Times profiled college graduates in places like Nigeria and Kenya making a living writing essays for college students in America and other wealthy countries.

A quick web search for "write my research paper for me cheaply" reveals a thriving industry. In Silicon Valley, parents pay $400 an hour for college admissions coaches who essentially write their kids' essays. One study found 47% of students think using websites to find homework answers is "somewhat or very acceptable."

The cheating horse hasn't just left the barn - it's galloping across the next county. Banning ChatGPT is like banning calculators because students might use them on arithmetic tests. It completely misses the point.

Here's my hot take: we should allow students to submit AI-generated work. Grade it on quality. If someone uses ChatGPT to write garbage, give them an F. If they craft brilliant prompts that produce exceptional work, give them an A.

Because here's the thing: prompting is writing. It's thinking. It's the same cognitive process of structuring ideas and arguments, just with a different interface.

What AI Tutoring Actually Looks Like

But Khan isn't primarily interested in AI as a writing tool. He's interested in AI as a tutor. And the examples he provides are genuinely mind-blowing.

Take Saanvi, a ninth-grader in India reading The Great Gatsby. She's stuck on why Gatsby keeps staring at the green light. Instead of Googling for CliffsNotes, she opens Khanmigo (Khan Academy's AI tutor) and asks to speak with... Jay Gatsby himself.

JAY GATSBY: Ah, the green light. It is a symbol of my dreams and desires. You see, it is situated at the end of Daisy Buchanan's dock, across the bay from my mansion. I gaze at it longingly, as it represents my yearning for the past and my hope to reunite with Daisy, the love of my life.

She literally has a conversation with the character. At one point, she apologizes for taking up his time, and "Gatsby" gently reminds her he's just an AI simulation.

This isn't just a gimmick. It's a fundamental shift in how we can engage with knowledge. Instead of passive consumption - reading about historical figures or literary characters - students can have active dialogues with simulations of them. Imagine debating philosophy with Socrates, discussing physics with Einstein, or co-writing the Federalist Papers with Hamilton.

Khan tells another story about using Khanmigo to explore the Second Amendment. He types: "Why do we have the Second Amendment? It seems crazy!"

Instead of lecturing or taking a political stance, the AI responds: "Why do you think the Founders included the Second Amendment to begin with?"

It's Socratic. It pushes the student to think deeper without imposing its own views. Try getting that kind of neutrality from a human teacher on gun control.

The Inequality Time Bomb

Here's where Khan's argument gets urgent. Educational inequality isn't just unfair - it's an existential threat. He calls it a "global education inequality time bomb," and the numbers back him up:

Louisiana spends $10,000 per student per year; New York spends $40,000

In India, government schools spend between $500 and $1,200 per student

25% of teachers in India are absent from school on any given day

Worldwide, girls are twice as likely to never set foot in a classroom

This inequality compounds over generations. Affluent families hire tutors, use test prep services, pay for college consultants. Their kids get the two-sigma boost. Everyone else falls further behind.

AI could be the great equalizer. For the first time, a kid in rural Louisiana or urban Detroit or a village in India could have access to the same quality of personalized instruction as a billionaire's child in Silicon Valley.

But only if we let them use it.

The Jobs Apocalypse (Or Not)

Khan devotes significant space to the "what about jobs?" question, and his answer is both optimistic and terrifying. Early studies from Wharton show 30-80% productivity improvements on white-collar analytical tasks using AI. Entry-level positions in tech, consulting, finance, and law are evaporating.

His solution? We need to "invert the labor pyramid." Instead of lots of people doing routine tasks supervised by a few doing creative work, everyone needs to become what Daniel Priestley calls "high agency generalists" - people with broad skills who can orchestrate AI to solve problems.

Khan writes:

We are entering a world where we are going back to a pre-Industrial Revolution, craftsmanlike experience. A small group of people who understand engineering, sales, marketing, finance, and design are going to be able to manage armies of generative AI and put all of these pieces together.

This is either incredibly exciting or utterly terrifying, depending on whether you think we can retrain billions of people fast enough. Khan, ever the optimist, thinks we can - but only if we completely reimagine education starting right now.

Star Trek vs. Blade Runner

The book's most thought-provoking section explores two possible futures. In one, we get Star Trek: a post-scarcity society where everyone is educated, creative, and free to explore their potential. In the other, we get a dystopia of mass unemployment, purposelessness, and susceptibility to demagogues.

Khan doesn't mince words:

If we don't, societies will increasingly fall prey to populism. People with time but no sense of purpose or meaning don't tend to be good for themselves or others.

The difference between these futures? Whether we embrace AI in education now or continue fighting it.

The Under-Discussed Revolution

After finishing the book and recording my podcast, I actually think Khan understates how revolutionary AI will be for education. He focuses mainly on AI as a better delivery mechanism for traditional learning. But what if the whole concept of "learning" is about to change?

Consider: if AI can write, code, and analyze better than most humans, why learn these skills at all? It's like teaching kids calligraphy after the invention of the printing press.

Khan touches on this briefly, quoting Bill Gates on the "confounding paradox" - we now have tools that make learning easier, but they also make people wonder if they need those skills at all.

I think the answer is that we'll need to completely reconceptualize what education is for. Not to make us economically valuable (AI will do that better), but to make us more human. To help us ask better questions, think more creatively, connect ideas across domains.

In other words, education needs to make us the kind of people who can work with AI rather than be replaced by it.

My Verdict

Is Brave New Words just an advertisement for Khan Academy? Kind of.

But Khan is also right about the fundamental problem: our education system is catastrophically broken, we know exactly how to fix it (personalized tutoring), and AI might finally make that fix scalable.

The question isn't whether AI will transform education. It's whether we'll let it transform education in time to matter. Every day we spend banning ChatGPT instead of integrating it is another day China pulls ahead, another cohort of students falls behind, another step toward the dystopia instead of the Star Trek future.

Khan ends with this:

This is not a drill. Generative AI is here to stay. The AI tsunami has drawn back from the shore and is now barreling towards us. Faced with the choice between running from it or riding it, I believe in jumping in with both feet.

After reading this book, recording a podcast about it, and spending way too much time thinking about its implications, I'm convinced he's right. The tsunami is coming whether we like it or not.

The only question is: will we teach our kids to surf?

Note: This review is based on the May 2024 edition of "Brave New Words." Given how fast AI is evolving, some of Khan's predictions may already be outdated. Then again, they might be too conservative. We're living in exponential times.